10 Year Anniversary Workshop of NVIDIA Application Lab at Jülich

On June 21 and 22, we held a workshop looking back on the last ten years of our lab together with NVIDIA – the NVIDIA Application Lab at Jülich (or NVLab, as I sometimes abbreviate it). The material can be found in the agenda on Indico.

We invited a set of application owners with whom we worked during that time to present past developments, recent challenges, and future plans. On top of that, we had two other GPU-focused talks: Markus Hrywniak from NVIDIA presented some distinctive features of NVIDIA's next-generation GPU (Hopper H100) and how applications can make use of them.

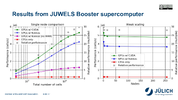


And Damian Alvarez presented the current state of JUWELS Booster and highlighted the lab's work in identifying issues and shortcomings of the machine, which were observed and analyzed in close collaboration with specific users.

I also gave a presentation: the opening talk, covering all the things we did in the lab over the last ten years. Among other things, I counted 32 trainings held (plus 11 additional trainings at conferences) and 18 workshops. I did not dare to count the optimized applications, for fear of forgetting one… Browsing through old material, I found a report about the creation of the lab in the Spring 2013 issue of the GCS InSiDE magazine (link to PDF)¹. An interesting snippet: “For many applications, using a single GPU is not sufficient, either because more computing power is required, or because the problem size is too large to fit into the memory of a single device. This forces application developers to not only consider parallelization at device level, but also to manage an additional level of parallelism.” This seems to be a universal truth, still valid today.


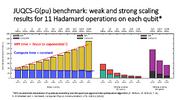

From the application developers, we heard about quantum computer simulators, both general-purpose simulators (Hans de Raedt) and simulators targeting specific aspects (Dennis Willsch), each with its own challenges, be it limited memory and extensive communication or complicated communication patterns. Alexander Debus presented recent developments of PIConGPU, a plasma physics simulator capable of scaling to various large machines (including JUWELS Booster, of course) by using many sophisticated abstractions under the hood. In two talks held virtually from North America, we heard about current work on brain image classification (Christian Schiffer) and on simulations of polymeric systems (Ludwig Schneider).


Christian presented a whole set of applications, all working towards the goal of enabling semi-automatic, partly live classification of brain regions. Ludwig Schneider presented SOMA, which uses OpenACC for acceleration and was recently augmented with guidance functionality based on Machine Learning.

In a talk about our fresh² OpenGPT-X project, Stefan Kesselheim highlighted the importance of large-scale language models and outlined our exciting plans for training open models on JUWELS Booster.

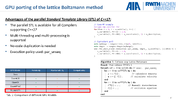

On the second day, Lars Hoffmann opened a group of talks about weather and climate (W&C) simulations with a talk about MPTRAC. MPTRAC is another OpenACC application we worked on in the past; it was recently augmented with sophisticated ideas for dealing with the large amount of input data. Another W&C code is MESSy, or rather a whole infrastructure for simulations, with which we have been working extensively for quite some time now; still, as the talk by Kerstin Hartung showed, there are many pieces to this GPU puzzle.

Concluding this group, Jaro Hokkanen, who now works at CSC in Finland, kindly presented our past ParFlow GPU work remotely. ParFlow uses a custom, embedded DSL to hide a specific backend behind pre-processor macros; with that, targeting different accelerators is comparably easy.
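To make the macro idea a bit more concrete, here is a minimal C++ sketch under stated assumptions: the macro `PF_LOOP`, the flag `USE_OPENMP_BACKEND`, and the function `axpy` are hypothetical names invented for illustration, not ParFlow's actual interface. The application loop is written once against the macro, and the pre-processor decides at compile time whether it expands to a plain serial loop or an OpenMP-parallel one. In the same spirit, a GPU backend could map the macro to a kernel launch instead (not shown here).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical macro and flag names, for illustration only (not ParFlow's real API).
#if defined(USE_OPENMP_BACKEND)
// CPU backend: distribute loop iterations across OpenMP threads.
#define PF_LOOP(i, n) _Pragma("omp parallel for") for (std::size_t i = 0; i < (n); ++i)
#else
// Default backend: a plain serial loop.
#define PF_LOOP(i, n) for (std::size_t i = 0; i < (n); ++i)
#endif

// Application code is written once against PF_LOOP and stays backend-agnostic.
// Computes y <- y + a * x element-wise.
void axpy(double a, const std::vector<double>& x, std::vector<double>& y) {
  assert(x.size() == y.size());
  const std::size_t n = x.size();
  PF_LOOP(i, n) {
    y[i] += a * x[i];
  }
}
```

Compiling with `-fopenmp -DUSE_OPENMP_BACKEND` selects the threaded expansion; without the define, the same source compiles to the serial loop.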

Finally, two talks shared experiences with handling Lattice-Boltzmann (LB) algorithms. First, Fabio Schifano presented D2Q37, an LB application with a long history on GPUs that is currently venturing into FPGAs. Funnily enough, Fabio and the D2Q37 code were already part of the very first Kick-Off Workshop ten years ago!

And as the last presentation, Miro Gondrum and Moritz Waldmann talked about M-AIA (previously known as ZFS) and their efforts to port the application to GPUs using the parallel STL; it was quite interesting to hear their views on portability.
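For readers unfamiliar with the parallel STL: since C++17, many standard algorithms accept an execution policy, and compilers such as NVIDIA's nvc++ (with `-stdpar=gpu`) can offload such calls to a GPU. The following minimal sketch assumes that route. The function `relax` and its BGK-style relaxation update are hypothetical stand-ins chosen to match the LB theme of the session, not code taken from M-AIA.

```cpp
#include <algorithm>
#include <cassert>
#include <execution>
#include <vector>

// Hypothetical example, not M-AIA code: a BGK-style relaxation step
//   f <- f - omega * (f - f_eq)
// applied element-wise. The par_unseq policy permits parallel and vectorized
// execution; with nvc++ -stdpar=gpu, such a call can be offloaded to a GPU.
void relax(std::vector<double>& f, const std::vector<double>& f_eq, double omega) {
  assert(f.size() == f_eq.size());
  std::transform(std::execution::par_unseq,
                 f.begin(), f.end(),  // first input range: current distribution values
                 f_eq.begin(),        // second input range: equilibrium values
                 f.begin(),           // results are written back in place
                 [omega](double fi, double feq) { return fi - omega * (fi - feq); });
}
```

With a regular host compiler, the same code runs on the CPU; only the compiler and its flags change, which is the main appeal of this approach for portability.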

All in all, it was an amazing workshop, where we saw the fruits of many years of work on applications and how the developers progressed after the various forms of collaboration we had with them.

Let’s do that again!

¹ A press release was also made for the lab creation. It contains a pretty cool picture, which Jiri Kraus (a lab member since day 1) reminded me of.

² Actually not so fresh anymore. Time passes by so quickly!