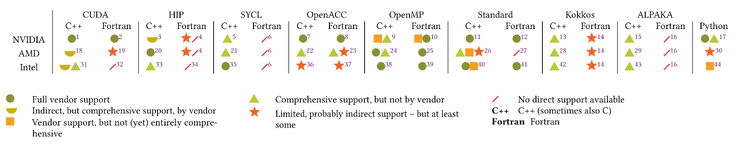

Paper: Many Cores, Many Models – GPU Programming Model vs. Vendor Compatibility Overview

In November of 2022, I created a table comparing GPU programming models and their support on GPUs of the three vendors (AMD, Intel, NVIDIA) for a talk. The audience liked it, so I beefed it up a little and posted it on this very blog.

People still liked it, so I took the opportunity of some downtime to update and significantly extend it, and to turn it into a paper for the SC23 Workshop on Performance, Portability and Productivity in HPC (P3HPC). In November 2023, the paper was published as part of the SC23 Workshop Proceedings. Although it’s closed access, you can access it freely if you come from this blog¹; alternatively, check out the preprint².

I also created a dedicated Models page here on the blog, which has the content in HTML form – and also includes the link to the paper.

I’ve changed the following things, compared to the original blog post:

- Updated all entries and checked for additions, deprecations, and other developments; extended the descriptions

- Described the methods and categories more thoroughly

- Added references for each entry, usually referring to the tool or specific aspect discussed

- Added discussions and a conclusion

- Kept the GitHub repo as the single source of truth, but extended it to generate the PDF paper and to include references in the HTML version (through some horrible Pandoc trickery³)

As the paper is by no means finished but a mere snapshot of the status quo in November 2023, my mini-presentation at the P3HPC workshop (embedded below) asked the community for help in keeping the content up to date, in the form of contributions to the GitHub repository.

So, please help keep the content up to date! All accepted updates will be incorporated immediately into the Models page, the new home of the table. In case you ever forget, the table and repository are also linked from the P3HPC workshop website.

-

While I have you here, let me talk about TeX. I’ve created the PDF following the SC23 ACM template guidelines. The most elaborate TeXing is some TikZ sprinkled here and there. For the literature, I used BibLaTeX, as per ACM’s guidelines. Now, first, arXiv has issues with the two-column layout and stumbles over hyphenating words for line breaks. I could fix most of these issues, but not all. Then, for the actual publishing, it turned out that the publisher cannot accept BibLaTeX at all, but needs BibTeX. Because I use a lot of URLs and the appropriate fields in the bib file, I needed to rework this extensively, at the last minute and with a less pleasing look. So while the arXiv version still contains BibLaTeX (but has hyphenation issues), the published version has BibTeX (but no hyphenation issues). A locally generated pre-preprint with proper hyphenation and bibliography can be found here for reference. Phew.

-

A reference can be cited by multiple entries, of course, so just adding the references behind each entry's explanation would create a lot of duplication. To resolve that, I basically concatenate all references into one long, Markdown-formatted string and convert it to HTML with Pandoc, letting Pandoc resolve the dependencies. I then parse the HTML string again to extract the references for each table entry in HTML form and add them to the output.
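The trick described above can be sketched roughly as follows. This is a minimal illustration and not the repository's actual code: the entry ids, the link labels, and the function names `build_markdown`, `run_pandoc`, and `split_by_entry` are all hypothetical. It assumes each entry's references are written as Markdown reference-style links, wraps each entry in a Pandoc fenced div so it can be found again in the HTML, and lets Pandoc resolve the shared link definitions in a single pass.

```python
import subprocess
from html.parser import HTMLParser


def build_markdown(entries: dict[str, str], link_defs: str) -> str:
    """Wrap each entry's reference text in a Pandoc fenced div carrying its id."""
    blocks = [f"::: {{#{key}}}\n{text}\n:::" for key, text in entries.items()]
    # Shared link definitions appear only once; Pandoc resolves them for every entry.
    return "\n\n".join(blocks + [link_defs])


def run_pandoc(markdown: str) -> str:
    """Convert Markdown to an HTML fragment (needs a `pandoc` binary on PATH)."""
    result = subprocess.run(
        ["pandoc", "--from", "markdown", "--to", "html"],
        input=markdown, capture_output=True, text=True, check=True,
    )
    return result.stdout


class EntrySplitter(HTMLParser):
    """Collect the inner HTML of every outermost <div id="..."> element."""

    def __init__(self) -> None:
        # Keep entities such as &amp; untouched so they can be re-emitted as-is.
        super().__init__(convert_charrefs=False)
        self.entries: dict[str, str] = {}
        self._key = None
        self._depth = 0
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self._key is None:
            key = dict(attrs).get("id")
            if tag == "div" and key:
                self._key, self._depth, self._parts = key, 1, []
            return
        if tag == "div":
            self._depth += 1
        self._parts.append(self.get_starttag_text())

    def handle_startendtag(self, tag, attrs):
        if self._key is not None:
            self._parts.append(self.get_starttag_text())

    def handle_endtag(self, tag):
        if self._key is None:
            return
        if tag == "div":
            self._depth -= 1
            if self._depth == 0:
                self.entries[self._key] = "".join(self._parts).strip()
                self._key = None
                return
        self._parts.append(f"</{tag}>")

    def handle_data(self, data):
        if self._key is not None:
            self._parts.append(data)

    def handle_entityref(self, name):
        if self._key is not None:
            self._parts.append(f"&{name};")

    def handle_charref(self, name):
        if self._key is not None:
            self._parts.append(f"&#{name};")


def split_by_entry(html: str) -> dict[str, str]:
    """Map each entry id to the HTML of its references."""
    parser = EntrySplitter()
    parser.feed(html)
    parser.close()
    return parser.entries
```

With Pandoc installed, the three steps chain as `split_by_entry(run_pandoc(build_markdown(entries, link_defs)))`, where `entries` maps an id such as `"cuda-nvidia"` (made up here) to text like `"[CUDA docs][cuda]"` and `link_defs` holds the `[cuda]: ...` definitions. Keeping the definitions in one place is what avoids the duplication: every entry can cite the same label, and Pandoc fills in the URL each time.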